The Definitive 2025 Guide to FMEA: Mastering Proactive Risk Management

In a world of escalating complexity, from autonomous vehicles to intelligent medical devices, the cost of failure has never been higher. Proactively identifying and mitigating risk is no longer a best practice; it is a fundamental requirement for survival and success. Failure Mode and Effects Analysis (FMEA) is a systematic, team-driven tool designed to foresee potential failures in products and processes before they ever occur.

The Strategic Imperative of FMEA: From Cost Center to Competitive Advantage

For too long, many organizations have viewed risk management activities like FMEA as a mere cost center or a box to be checked for regulatory compliance. This perspective is dangerously outdated. When implemented correctly, FMEA is a powerful strategic tool that directly enhances profitability, protects brand reputation, and drives significant return on investment (ROI). The core principle is simple but profound: preventing a failure is exponentially cheaper than correcting one.

The greatest leverage of FMEA is found in the earliest stages of the development lifecycle. Addressing a potential design flaw on a digital drawing board costs pennies compared to the millions required for a post-launch product recall.

This financial reality is quantified by the “Cost of Poor Quality” (COPQ), a metric that captures all expenses related to process and product failures, including rework, scrap, warranty claims, and lost sales. For a company with $100 million in revenue, the difference between an efficient operation with a 1% COPQ ($1 million) and a less efficient one with a 5% COPQ ($5 million) is a staggering $4 million in annual costs that could have been invested in growth and innovation. FMEA is one of the most effective methodologies for systematically driving down COPQ.
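The COPQ comparison above is plain arithmetic, so a short sketch makes it easy to rerun with your own figures. The function name `copq_cost` and its parameters are illustrative assumptions, not part of any standard; only the $100 million revenue and the 1% and 5% rates come from the example.

```python
def copq_cost(revenue: float, copq_rate: float) -> float:
    """Annual Cost of Poor Quality for a revenue figure and a COPQ rate (0.05 means 5%)."""
    if revenue < 0 or not 0.0 <= copq_rate <= 1.0:
        raise ValueError("revenue must be non-negative and copq_rate must be within 0..1")
    return revenue * copq_rate


revenue = 100_000_000  # the $100 million company from the example
efficient = copq_cost(revenue, 0.01)    # 1% COPQ
inefficient = copq_cost(revenue, 0.05)  # 5% COPQ
gap = inefficient - efficient           # money unavailable for growth and innovation

print(f"Efficient operation (1% COPQ):   ${efficient:,.0f}")
print(f"Inefficient operation (5% COPQ): ${inefficient:,.0f}")
print(f"Annual gap:                      ${gap:,.0f}")
```

Swapping in your own revenue and measured COPQ rate gives a first-order estimate of what is at stake before any FMEA work begins.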

The financial argument becomes even more compelling when considering the lifecycle cost of defects. Research demonstrates that addressing a problem during the operations or post-launch phase can be at least 29 times more costly than fixing it during the initial design phase. This is not just about manufacturing costs; it includes the immense expense of recalls, litigation, and reputational damage. Case studies have validated the substantial ROI of FMEA, particularly in complex fields like software development, where the return has been estimated at 10x to 40x in cost avoidance for every dollar invested in the analysis. One model calculated the cost of a single defect found by a customer to be as high as $70,000.

Ultimately, these financial metrics—COPQ, ROI, and lifecycle cost—provide a bridge between the engineering department and the C-suite. They translate the technical work of identifying failure modes into the language of business impact. A successful FMEA program is one that can articulate its findings not just in terms of risk scores, but in terms of margin protection, cost savings, and enhanced customer satisfaction.

The Genesis and Evolution of a Resilient Tool: A History Forged in High-Stakes Environments

To understand the power of FMEA, one must appreciate its origins. It is not an academic theory but a practical tool forged in environments where the consequences of failure were absolute. Its history reveals a continuous adaptation to the most complex technological challenges of the era, cementing its credibility as a premier risk management methodology.

From Munitions to the Moon

The formal concept of FMEA emerged from the crucible of military necessity in the late 1940s. The U.S. military, dealing with increasingly powerful and complex munitions, needed a structured way to anticipate and prevent malfunctions before they could have catastrophic consequences. This led to the creation of the first documented military procedure, MIL-P-1629, in 1949, which laid the foundation for the FMEA process we know today.

FMEA’s next great leap came with the space race. In the 1960s, NASA and its contractors heavily adopted FMEA and its more detailed counterpart, Failure Mode, Effects, and Criticality Analysis (FMECA), for the Apollo program. For a mission involving costly, limited-production spacecraft and the lives of astronauts, a reactive “trial and error” approach was unthinkable. FMEA provided the proactive framework needed to analyze the unprecedented complexity of the technology and mitigate risks before they could jeopardize the mission and the nation’s reputation.

The Automotive Revolution and Global Standardization

While born in government programs, FMEA’s transition into a global commercial standard was driven by the automotive industry. In the late 1970s, Ford Motor Company began championing FMEA, largely in response to rising safety concerns and the massive liability costs associated with design failures, famously highlighted by the Ford Pinto case. This marked a pivotal moment, proving FMEA’s value in mass production environments where safety and reliability were paramount.

As supply chains became increasingly global and complex in the 1990s, a standardized language for risk was needed. Automotive industry bodies, including the Automotive Industry Action Group (AIAG) in the U.S. and the Verband der Automobilindustrie (VDA) in Germany, developed and published their own FMEA reference manuals. This standardization was critical for ensuring consistent quality and risk management across thousands of suppliers worldwide. From these roots, FMEA’s application has expanded into nearly every industry imaginable, including healthcare, software development, energy, and even agriculture, proving the universal applicability of its logic.

The history of FMEA is not merely a timeline of events; it is a map of how our perception of risk evolves with technology. FMEA was first applied where complexity and consequence were highest: military hardware. As consumer products like cars became more complex and society’s expectation for safety grew, FMEA moved into the commercial sector. As businesses globalized, it evolved into a standard for managing supply chain risk. Today, as complexity resides in software, AI, and interconnected mechatronic systems, FMEA is evolving once again to meet the challenge.

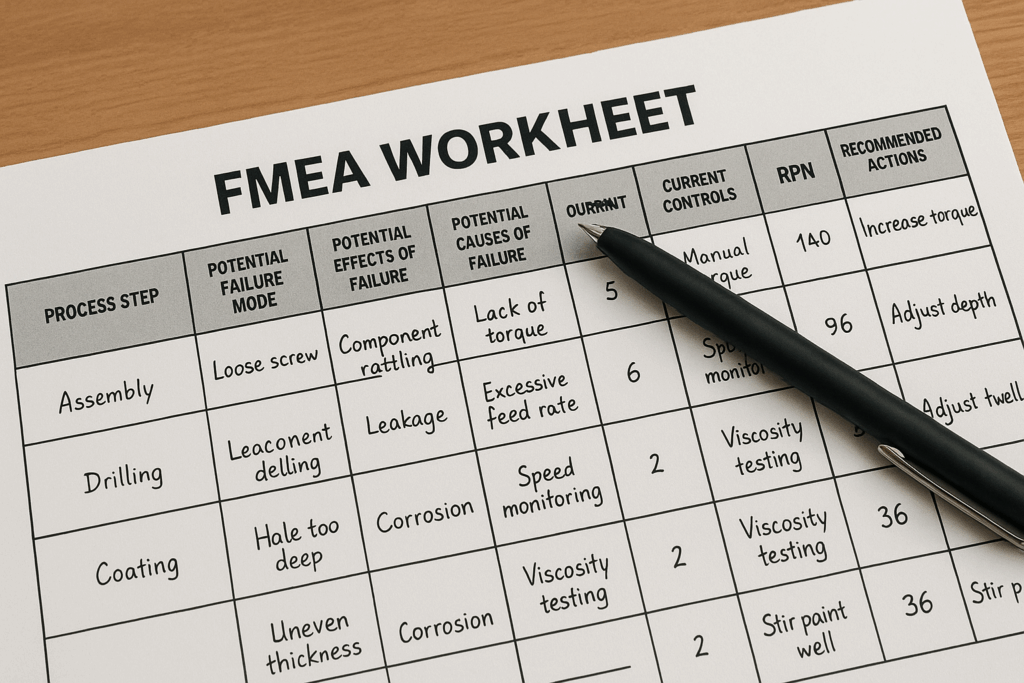

The FMEA Framework: A Disciplined Approach to Foreseeing Failure

At its heart, FMEA is a structured, disciplined process for answering a series of fundamental questions: What could go wrong? What would the consequences be? What could cause it to go wrong? And what can we do to prevent it? The modern approach, harmonized in the AIAG-VDA FMEA Handbook, provides a robust 7-step process that is a significant improvement over older, form-based methods.

The 7-Step Process

The AIAG-VDA methodology guides a cross-functional team through a logical progression of analysis and optimization:

1. Planning & Preparation: Define the scope of the analysis (e.g., a new product design, a specific manufacturing process), assemble the team, and gather necessary documents like diagrams and past failure data.

2. Structure Analysis: Break down the system or process into its constituent parts or steps. This creates a clear hierarchy for the analysis.

3. Function Analysis: For each element identified in the structure analysis, define its intended function or purpose.

4. Failure Analysis: Brainstorm the potential Failure Modes (how a function could fail), the potential Effects (the consequences of that failure), and the potential Causes (the specific mechanisms that lead to the failure).

5. Risk Analysis: Evaluate each failure chain using the three lenses of risk: Severity (S), Occurrence (O), and Detection (D).

6. Optimization: Based on the risk analysis, develop and implement actions to mitigate the highest-priority risks.

7. Results Documentation: Document the entire process, including the actions taken and the resulting reduction in risk. This becomes a valuable knowledge base for the organization.
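One way to picture what the Structure, Function, and Failure Analysis steps produce is a small tree: structure elements own functions, and each function owns failure chains made of a mode, an effect, and a cause. The sketch below is a minimal illustration under that assumption. The class names (`StructureElement`, `Function`, `FailureChain`) and the brake-line entries are hypothetical and are not a schema taken from the AIAG-VDA handbook.

```python
from dataclasses import dataclass, field
from typing import Iterator, List


@dataclass
class FailureChain:
    mode: str    # how the function could fail
    effect: str  # consequence of that failure
    cause: str   # mechanism that leads to the failure


@dataclass
class Function:
    description: str
    failure_chains: List[FailureChain] = field(default_factory=list)


@dataclass
class StructureElement:
    name: str
    functions: List[Function] = field(default_factory=list)
    children: List["StructureElement"] = field(default_factory=list)

    def walk(self) -> Iterator["StructureElement"]:
        """Yield this element and every descendant, depth first."""
        yield self
        for child in self.children:
            yield from child.walk()


# Hypothetical example entries, for illustration only.
brake_line = StructureElement(
    name="Brake line",
    functions=[
        Function(
            description="Carry brake fluid under pressure without leaking",
            failure_chains=[
                FailureChain(
                    mode="Line corrodes and leaks fluid",
                    effect="Loss of braking ability",
                    cause="Improper corrosion coating",
                )
            ],
        )
    ],
)
brake_system = StructureElement(name="Brake system", children=[brake_line])

for element in brake_system.walk():
    for function in element.functions:
        for chain in function.failure_chains:
            print(f"{element.name}: {chain.mode} -> {chain.effect} (cause: {chain.cause})")
```

Keeping the hierarchy explicit means each failure chain stays attached to the function it violates, which is what the later risk ratings are assigned against.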

The Three Lenses of Risk: Severity, Occurrence, and Detection

The risk analysis step hinges on evaluating each potential failure through three distinct lenses, each rated on a 1-to-10 scale:

- Severity (S): How serious is the ultimate effect of the failure on the end customer? A rating of 1 is insignificant, while a 10 is catastrophic, potentially involving a safety hazard without warning. This rating is determined without regard to how often the failure might happen or how easily it can be detected.

- Occurrence (O): How likely is the cause of the failure to occur? A rating of 1 means the cause is extremely unlikely, while a 10 means it is virtually inevitable. This rating is based on historical data, process capability, and engineering judgment.

- Detection (D): How well can existing controls (e.g., inspections, tests) detect the cause or the failure mode before the product reaches the customer? A rating of 1 means the control is certain to detect the problem, while a 10 means there is no control in place and no chance of detection.

From Flawed RPN to Logical Action Priority (AP)

For decades, these three ratings were multiplied to calculate a Risk Priority Number (RPN), where RPN = S × O × D. The idea was to prioritize the failure modes with the highest RPN scores. However, this method has several critical flaws:

- It gives Severity equal weight to Occurrence and Detection. A failure with a catastrophic severity (S=10) should always demand attention, even if its RPN is low.

- Different risk profiles can result in the same RPN. A high-severity, low-occurrence failure can have the same RPN as a low-severity, high-occurrence failure, masking the true priority.

- It encourages a “numbers game.” Teams can be tempted to lower a high RPN simply by improving detection (lowering the D score), which is the weakest form of risk mitigation, instead of addressing the root cause or the severity of the effect.
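The flaws listed above are easy to reproduce with a few lines of arithmetic. The sketch below is only an illustration: the function name `rpn` and the specific ratings are made-up examples, chosen to show two different risk profiles collapsing into one score and a detection-only change shrinking the score.

```python
def rpn(severity: int, occurrence: int, detection: int) -> int:
    """Classic Risk Priority Number: S x O x D, each rated from 1 to 10."""
    ratings = (("severity", severity), ("occurrence", occurrence), ("detection", detection))
    for name, value in ratings:
        if not 1 <= value <= 10:
            raise ValueError(f"{name} must be between 1 and 10, got {value}")
    return severity * occurrence * detection


# Different risk profiles can produce the same number.
catastrophic_but_rare = rpn(10, 1, 5)
minor_but_frequent = rpn(2, 5, 5)
print("Catastrophic but rare:", catastrophic_but_rare)
print("Minor but frequent:   ", minor_but_frequent)

# The "numbers game": only the detection rating changes, yet the RPN drops sharply
# while the cause (occurrence) and the effect (severity) are exactly as before.
before = rpn(8, 5, 8)
after_detection_only = rpn(8, 5, 2)
print("Before added inspection:", before)
print("After added inspection: ", after_detection_only)
```

Because multiplication treats the three ratings as interchangeable, nothing in the resulting number records which rating drove it.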

Recognizing these limitations, the harmonized AIAG-VDA standard replaced RPN-based prioritization with Action Priority (AP) tables. Instead of multiplying the numbers, the AP method uses the combination of S, O, and D ratings to look up a priority level: High (H), Medium (M), or Low (L). This new logic ensures that high-severity issues are given the attention they deserve. A failure with a Severity of 9 or 10 reaches a High priority at far lower Occurrence and Detection ratings than a less severe failure does, compelling the team to take action. This shift represents a return to sound engineering principles, focusing the team on solving the most significant problems rather than simply lowering a calculated number.

The following table illustrates why this change is so critical.

| Analysis Item | Failure Mode A: High-Severity Risk | Failure Mode B: Low-Severity Risk |
| --- | --- | --- |
| System/Component | Automotive Brake System | Automotive Interior |
| Potential Failure Mode | Brake line corrodes and leaks fluid | Interior trim panel rattles |
| Potential Effect | Complete loss of braking ability | Annoying noise for driver |
| Severity (S) | 10 (Catastrophic safety issue) | 4 (Minor nuisance) |
| Potential Cause | Improper corrosion coating on brake line | Clip holding panel is loose |
| Occurrence (O) | 2 (Low likelihood) | 5 (Occasional) |
| Detection (D) | 4 (Moderately detectable in testing) | 4 (Moderately detectable in testing) |
| RPN (S×O×D) | 80 | 80 |
| Action Priority (AP) | High (H) | Low (L) |

Conclusion: Under the old RPN system, these two vastly different risks appear equal. The AP system correctly identifies Failure Mode A as a high priority that must be addressed, while Failure Mode B is a low priority.

This example clearly shows that the move from RPN to AP is not a minor tweak but a fundamental improvement that aligns the FMEA process with logical risk management, ensuring that the most dangerous potential failures are never ignored.
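To make the contrast concrete, the sketch below scores the two failure modes from the table both ways. The `simplified_priority` function is a deliberately simplified, hypothetical severity-first rule written for this illustration. It is not the published AIAG-VDA AP table, which is the authority and may assign different priorities to the same ratings.

```python
def rpn(severity: int, occurrence: int, detection: int) -> int:
    """Classic Risk Priority Number: S x O x D."""
    return severity * occurrence * detection


def simplified_priority(severity: int, occurrence: int, detection: int) -> str:
    """Severity-first lookup using HYPOTHETICAL bands (not the handbook's AP table)."""
    if severity >= 9:
        return "H" if occurrence >= 2 else "L"
    if severity >= 7:
        if occurrence >= 6:
            return "H"
        return "M" if occurrence >= 2 else "L"
    if severity >= 4:
        if occurrence >= 6 or (occurrence >= 4 and detection >= 7):
            return "M"
        return "L"
    return "L"


# (S, O, D) ratings taken from the comparison table.
failure_modes = {
    "A: brake line corrodes and leaks": (10, 2, 4),
    "B: interior trim panel rattles": (4, 5, 4),
}

for name, (s, o, d) in failure_modes.items():
    print(f"{name}: RPN={rpn(s, o, d)}, priority={simplified_priority(s, o, d)}")
```

The design point is that severity is checked first and decides which band of rules applies, so a catastrophic effect cannot be averaged away by low occurrence or detection ratings the way it can in a product.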

The Next Horizon: The Future of FMEA

FMEA is not a static methodology; it is continuously evolving to address the risks posed by modern technology. The next frontier for FMEA involves moving beyond simple prevention to active monitoring of intelligent systems and leveraging artificial intelligence to create a dynamic, continuous risk intelligence process.

FMEA-MSR: The Guardian for Intelligent Systems

For today’s complex mechatronic systems, such as those in autonomous vehicles or advanced medical devices, it is sometimes impossible to completely design out all potential failures. For these situations, the AIAG-VDA handbook introduced a supplement to DFMEA called FMEA for Monitoring and System Response (FMEA-MSR). FMEA-MSR focuses on the system’s ability to monitor itself for a failure during operation and then initiate a response to achieve a safe state within a specified time.

Consider an automotive airbag system. A traditional DFMEA would analyze the failure of a crash sensor. An FMEA-MSR, however, analyzes the diagnostic system that supervises that sensor.

- Failure Mode (in FMEA-MSR): “Diagnostic system fails to detect that a primary crash sensor has become unresponsive.”

- Effect: “In the event of a crash, the airbag fails to deploy.” (Severity = 10).

- Analysis Focus: The analysis then shifts to improving the monitoring and response capabilities. Can the system use other sensors (e.g., accelerometers) to validate the crash sensor’s status? Can it alert the driver with a warning light that the safety system requires service? FMEA-MSR is crucial for ensuring the functional safety of intelligent systems that must manage their own failures in real time.
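The monitor-then-respond idea in the airbag example can be sketched as a small watchdog: if the crash sensor stays silent longer than an allowed window, the system flags a degraded state and turns on the warning light. Everything here is a hypothetical illustration. The class name `CrashSensorMonitor`, the heartbeat mechanism, and the 0.1 second timeout are assumptions, not values or designs from the AIAG-VDA handbook or any real airbag controller.

```python
from dataclasses import dataclass


@dataclass
class CrashSensorMonitor:
    timeout_s: float                # hypothetical: longest allowed silence from the sensor
    last_heartbeat_s: float = 0.0   # timestamp of the most recent sensor response
    warning_light_on: bool = False  # latched once a fault is detected, until service

    def record_heartbeat(self, now_s: float) -> None:
        """Call whenever the crash sensor answers a diagnostic poll."""
        self.last_heartbeat_s = now_s

    def check(self, now_s: float) -> str:
        """Return 'OK' or 'DEGRADED'; on 'DEGRADED', latch the warning light."""
        if now_s - self.last_heartbeat_s > self.timeout_s:
            self.warning_light_on = True  # system response: alert the driver
            return "DEGRADED"
        return "OK"


monitor = CrashSensorMonitor(timeout_s=0.1)
monitor.record_heartbeat(now_s=0.0)

print(monitor.check(now_s=0.05), monitor.warning_light_on)  # sensor answered recently
print(monitor.check(now_s=0.25), monitor.warning_light_on)  # sensor has gone silent
```

Timestamps are passed in explicitly so the behavior is deterministic and easy to test. An FMEA-MSR on a design like this would ask what happens if `check` itself is never called, or if the timeout is longer than the time available to reach a safe state.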

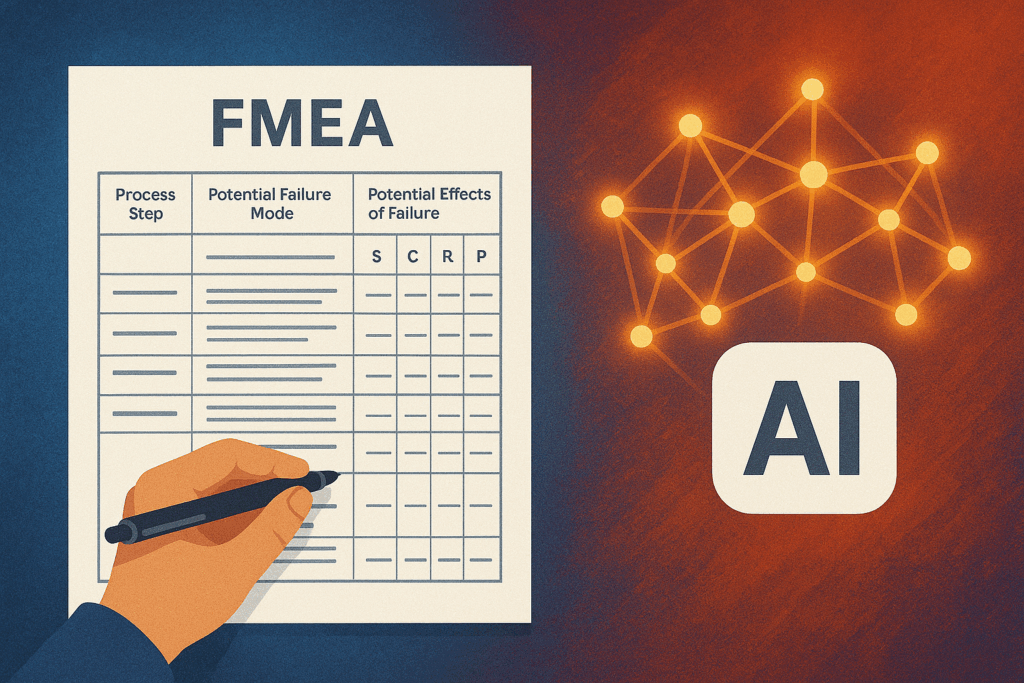

The AI Co-Pilot: Augmenting FMEA with Machine Learning

The traditional FMEA process, while effective, is manual, time-consuming, and limited by the knowledge and experience of the team members. Artificial Intelligence (AI) and Machine Learning (ML) are set to revolutionize this process, transforming it from a periodic, labor-intensive event into a continuous, data-driven activity.

Recent academic and industry studies show several promising applications:

- Automated Risk Prediction: ML models can be trained on vast amounts of historical FMEA and field failure data to automatically predict risk levels (like AP ratings) for new designs or processes. This can dramatically accelerate the initial analysis, allowing human experts to focus on the highest-risk areas. One study demonstrated a Random Forest Classifier that could predict failure risk classifications with 82.9% accuracy.

- Natural Language Processing (NLP) for Insight Mining: This is perhaps the most transformative application. Modern systems generate massive amounts of unstructured text data—customer reviews, technician service logs, social media comments, and internal reports. It is impossible for a human team to manually review all this data. NLP algorithms, however, can. A groundbreaking case study from Intel showed how they used NLP and sentiment analysis to analyze manufacturing tool logs. Their AI-powered system performed the FMEA in seconds, a task that previously took weeks of engineering effort. The AI found all the failure modes the human team had identified, plus additional ones they had missed.

This convergence of FMEA-MSR, real-time data from IoT sensors, and the analytical power of AI marks a paradigm shift. FMEA is evolving from a static spreadsheet created at a single point in time into a living, dynamic system of Continuous Risk Intelligence. The “FMEA of the future” will likely be a real-time dashboard that continuously monitors a product fleet or manufacturing line, with AI automatically detecting new failure patterns, updating risk profiles, and flagging emerging threats for human engineers to investigate. This transforms FMEA from a historical record into a truly predictive, adaptive, and intelligent risk management system.

Implementing FMEA: A Call to Proactive Leadership

Failure Mode and Effects Analysis is one of the most powerful tools available for building robust products, reliable processes, and resilient organizations. Its value has been proven for over 70 years, from ensuring the success of the Apollo missions to safeguarding patients in modern hospitals. The evolution to the Action Priority model and the integration of AI promise to make it even more effective in the years to come.

However, the success of FMEA hinges on more than just understanding the methodology. The greatest risks to a successful FMEA program are not technical but organizational. For FMEA to deliver on its promise of significant ROI and enhanced quality, several factors are critical:

- The Cross-Functional Team: An FMEA conducted by a single person or department in isolation is an FMEA destined to fail. The analysis requires the collective wisdom and diverse perspectives of a team comprising members from design, manufacturing, quality, testing, maintenance, and even sales and customer service.

- Leadership Commitment: A thorough FMEA requires a significant investment of time from an organization’s most valuable experts. These resources will only be allocated if leadership understands and champions the process. This requires moving the conversation from technical risk scores to business impact: reduced Cost of Poor Quality, higher ROI, and protected brand equity.

- A Living Document: FMEA is not a “one and done” activity. It must begin at the earliest conceptual stages of a project and be continuously reviewed and updated as designs change, processes are modified, or new information from the field becomes available.

Ultimately, the most common failure mode of FMEA is a lack of organizational commitment. When leadership fails to provide the time, resources, and cultural support for a robust analysis, the result is a “checkbox” exercise that produces paperwork but no real value. The call to action, therefore, is for leaders to embrace a culture of proactive risk management. By empowering their teams to execute FMEA with the discipline and resources it requires, they are not just preventing failures; they are making a strategic investment in the long-term quality, safety, and profitability of their enterprise.

Source: https://thebulklab.com